It’s extremely common for a corporate website to require integration with some sort of external data. In my work, I have encountered many variations in how that data gets pulled in and how it gets used on the site.

An important thing to remember about Umbraco is that when a site is architected properly, it works seamlessly with anything you can access through a common interface. Basically, anything that ASP.NET can handle, Umbraco can utilize.

I’ve found that there are three important questions to consider when designing your integration setup.

1. Where is the data coming from?

When you are looking at an integration need, start with the source of the data. It might be available via an API (for instance, a REST API), or it might be provided in a file of some sort, such as an Excel sheet.

I have worked with both types of external data – provided via API and via file imports. Other than making sure that the data is accessible to the website code, the differences aren’t that important. The more important issue to consider is whether the data will be available via “push” or “pull”. The answers to these follow-up questions can help pinpoint that: How frequently will the data be updated? Who will be providing the updates? How will the system “know” when the data is updated?

For example, I worked on two different projects where data was provided in a “file import” format, specifically CSV.

In one of the projects, the third-party data provider (a financial institution) was set up to upload a daily data file to a certain FTP server, which the website could also access. At first glance, this might seem like a “push” situation, but it isn’t exactly. The third party wasn’t pushing the data file to the website directly, but rather to an intermediate location which the website needed to pull from. To handle this, we created a Web API endpoint which could be called at scheduled intervals. The code would download the designated file (they had a standard filename format which included the date), temporarily store it locally, then process the data via a CSV import routine.

For another project, the data was provided by people in a different department of the company. The information might be ready for import at various times, so those people were given access to upload functionality, and the import code would run at the moment they uploaded a file. This is what I would call a “push” operation.

Understanding push and pull is helpful when considering what sort of interface or automation is needed.
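The scheduled pull described above can be sketched roughly as follows. This is only an illustration in Python for brevity (on an Umbraco site the same logic would live in .NET code), and the file name pattern `daily_data_YYYYMMDD.csv`, the column names, and the `fetch` callback are all assumptions made for the example, not details from the actual project. The download step is passed in as a function so that an FTP client, or anything else, can be plugged in.

```python
import csv
import io
from datetime import date
from typing import Callable, Dict, List


def daily_filename(day: date, prefix: str = "daily_data") -> str:
    """Build the expected file name for a given day.

    The prefix and date format are hypothetical; use the provider's real convention.
    """
    return f"{prefix}_{day:%Y%m%d}.csv"


def parse_csv(text: str) -> List[Dict[str, str]]:
    """Turn CSV text with a header row into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def run_scheduled_import(day: date, fetch: Callable[[str], str]) -> List[Dict[str, str]]:
    """Pull the day's file through `fetch` and parse it.

    `fetch` stands in for the real download step (for example, an FTP client)
    and must return the file contents as text.
    """
    return parse_csv(fetch(daily_filename(day)))


if __name__ == "__main__":
    # A dictionary plays the role of the intermediate server in this demo.
    fake_server = {"daily_data_20240105.csv": "symbol,price\nABC,10.5\n"}
    rows = run_scheduled_import(date(2024, 1, 5), fake_server.__getitem__)
    print(rows)
```

Because the file name is derived from the date, the scheduler only needs to call `run_scheduled_import` once per day; a missing file surfaces as an error from `fetch`, which the caller can log or retry.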

2. What is the best way to access/store it?

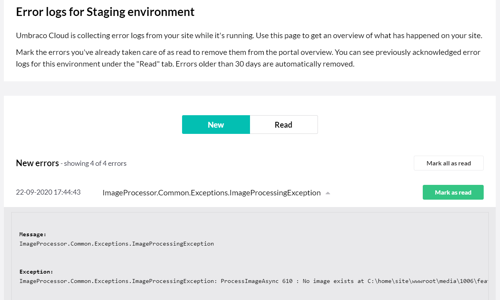

Once you know how you are getting the data, the next step is to determine how you will store it, and in what format. In general, I recommend some sort of local storage. Even if you are pulling the data via an always-available, unlimited-access API call (does such a thing even exist?), you will likely find that when it comes time to use and display that data, reading from a local data store is much more performant (not to mention cacheable). Additionally, many APIs have rate limits (a cap on the number of requests you can make per minute, hour, or day). And let’s not forget that services go down and networks can be unreliable. If you have a locally stored copy of the data, you can fall back to it rather than showing nothing or, worse, some sort of error.
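As a rough illustration of that fallback idea, the sketch below tries the live source first and, if the call fails, returns the last copy saved to disk. It is written in Python for brevity, and the function name `load_with_fallback`, the JSON file format, and the `fetch` callback are assumptions for the example rather than part of any particular project.

```python
import json
from pathlib import Path
from typing import Any, Callable


def load_with_fallback(fetch: Callable[[], Any], cache_file: Path) -> Any:
    """Return fresh data when the source responds, otherwise the last saved copy."""
    try:
        data = fetch()
    except Exception:
        # Source unavailable: fall back to the locally stored copy, if there is one.
        # Real code would likely catch narrower, source-specific errors.
        if cache_file.exists():
            return json.loads(cache_file.read_text(encoding="utf-8"))
        raise
    # Refresh the local copy so the next failure has something to fall back on.
    cache_file.write_text(json.dumps(data), encoding="utf-8")
    return data
```

With this shape, the page-rendering code never talks to the external service directly, so an outage shows slightly stale data instead of an error.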

How you store the data will partly be determined by usage (see question #3), but it also depends on the size of the data being stored and how long the data will have value. Will it be kept “forever” as a historical record, or is the data only useful in its most current form? Some possible storage options: custom database tables, Umbraco content nodes, or local files.

For one project, we pulled a massive product data set from the GDSN database via a third-party API. We only needed a small subset of all the possible data included in the API response, so on a schedule we made the request to the API and processed the data, converting the desired information into a smaller, more useful product object, which was then serialized to JSON and saved as a text file in the website file system. These files are easy to access and quick to read for on-page display.
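To make the "smaller product object" step concrete, here is a minimal sketch in Python. The `Product` fields (`gtin`, `name`, `brand`), the shape of the raw record, and the one-file-per-product layout are all hypothetical choices for the example; the real API response and the fields kept on that project are not shown here.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Any, Dict


@dataclass
class Product:
    """The small subset of fields the site actually displays (hypothetical)."""
    gtin: str
    name: str
    brand: str


def to_product(raw: Dict[str, Any]) -> Product:
    """Keep only the fields the site needs; everything else in `raw` is ignored."""
    return Product(
        gtin=str(raw["gtin"]),
        name=raw.get("name", ""),
        brand=raw.get("brand", ""),
    )


def save_product(product: Product, folder: Path) -> Path:
    """Serialize one product to JSON and write it to its own file."""
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / f"{product.gtin}.json"
    target.write_text(json.dumps(asdict(product), indent=2), encoding="utf-8")
    return target
```

Writing one small file per product keeps page rendering to a single file read, at the cost of managing many files on disk.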

3. How will it be used?

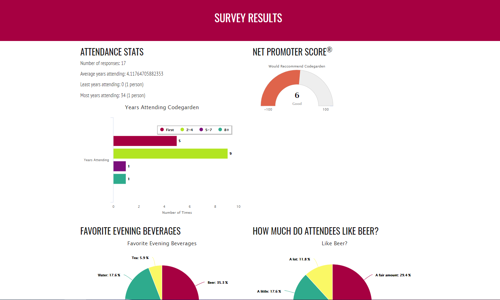

The final question might seem like the obvious one in many ways, but it pays to dig a bit into the details, especially since usage will often impact your final storage decisions. Will the data be displayed all together on a single page, or should there be separate website pages for each part of the data? Will the data be displayed as-is, used to create a dynamic chart, or mixed in with CMS-provided content on a page? Once you understand the specific usage required, you can put the right technical strategy in place.

I hope these three questions help you begin to plan your data integrations. Of course, these are just the first layer of considerations, and other things will also need to be addressed, like: Will the structure of the data ever change? How should missing data be handled? How will the quantity of the data change over time – expanding, contracting, or staying about the same?

If you have an Umbraco project with data integration needs, I’d be happy to discuss it with you - just schedule an Umbraco Discovery call.